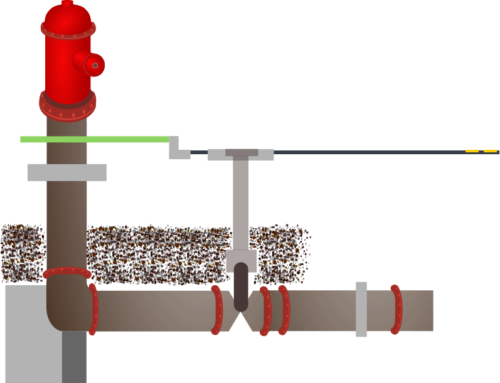

In this case study we are going through the process of designing a database for storing the information contained in an input file for EPANET, software which can analyse water supply distribution networks.

The last post waded through the definition of the physical data of our spring-tunnel-pool model. We put all the information into an EPANET data file, and this post starts to look at how we could store the same information in a database.

Our task of defining the necessary data is made much easier by the fact that we are working with an input file format that has been specified for us. All we have to do is match the definitions and see how they translate into database tables. In this first step, we will assume that each node type (reservoirs, tanks and junctions) will be stored in a separate table.

The simplest item is the reservoir, which represents the Gihon Spring. Two posts ago, the requirements for our data records were listed, and they are included below in the description of each column as we define our RESERVOIR table:

| Column name | Data type | Nullable? | Description |

|---|---|---|---|

| ID | Character / Key | No | ID label (up to 31 characters (letters and digits)) |

| HEAD | Floating point | No | Head (m or ft) |

| PATTERN_ID | Character /Foreign key | Yes | Head pattern ID (optional) |

These are the columns that represent the data required by the EPANET analysis engine. However, we now have some choices to make.

Identifiers

The interactive EPANET application allows the user to initially choose and later change the IDs of objects in the model. Now these IDs are unique identifiers of each object, which in database parlance we might call primary keys. But as we mentioned in an earlier newsletter, this uniqueness can spread over several classes: each node (reservoir, tank or junction) must have a different ID from all other nodes. So now we have to answer a common question: Do we want to enforce this uniqueness? In our example, we are modelling the data in an input file. On either side of this data file, we may have a tool which will do the checking for us. The EPANET interactive software enforces various uniqueness checks, and the EPANET analysis engine does the same, as well as further checking of the relationships between objects. If we were, for some reason, simply creating a tool that would read an input file and write the information to database tables, or a tool that would read the database tables and write out an input file, then we may have no need of any form of checking of IDs or relationships. Others will do the checking.

This highlights again one of the fundamental issues in data modelling: our modelling depends on what we need. What do we need for IDs in our modelling?

Here are some of the options:

- Store IDs as strings of characters. No uniqueness checking. No relationship checking. No validation at all. Assume our data is valid and leave it to others to do the checking.

- Store a separate unique key to keep a relational modeller like Django happy, but treat our ID fields just the same as any other character field with no checking.

- Store IDs as keys which must be unique in each table or add a uniqueness constraint to each table to give some protection against duplication. However, don’t bother checking for duplication between different types of nodes (reservoirs, tanks and junctions).

- Add a custom constraint which enforces uniqueness of IDs across the different node tables.

- Have a separate NODE table which stores the ID as a primary key along with any other shared attributes. A separate table for each of the node types would then store the attributes that are not shared. This requires four tables rather than three.

There are other options available too, but these give us enough to think about and help to set up a process for decision making.

Our fundamental question is: What is the simplest modelling that will do what we need?

If we are storing data that has already been checked, or feeding data to something that will do the checking for us, don’t do it again. In this situation, just store IDs as simple strings of characters and don’t bother with any constraints. Constraints generate errors; errors need handling.

I remember a project many years ago which involved several companies working together on a database application. One company had responsibility for or “control” over the database and spent many months working on complex triggers and constraints to protect the database from corruption. Another company – the one I worked for – had responsibility for manipulating the data that actually made the database valuable to the customer. The last and most important step of manipulating this data included modifying the primary keys of many rows in many tables. Now, practically, there is no reason why this should not be done, but conceptually, many practitioners think of primary keys as being utterly unchangeable. So when the data manipulation process reached its final stage and attempted to tweak the primary keys as necessary, all sorts of errors resulted. I remember the silence on the phone after I explained the situation. It was a lengthy silence, followed by, “But you can’t do that! It’s just wrong!” Significant work was required to remove the “protection” and allow the necessary data manipulation.

Obviously, not checking data can cause major problems, however, checking can become an obsession and cause just as many problems. We all make mistakes, and the more complex a solution, the more likely it is to contain mistakes.

In this case, if the need is just a database and we are not using any special tools to write to it, the best solution is option 1. Store keys as strings of characters with no constraints at all.

If you just need a database but are using an object relational mapper to write to or read from the database, options 2 or 3 will be best. Option 3 will be simpler.

If you are wanting a database that can be directly used by an input file editor or a network editor that you are developing, you will need to be doing the checking of uniqueness yourself, and it may be easiest to use the database to do it. In this case, you would want something like options 4 or 5. I would use option 5.

For our example, we will take option 1 as the simplest possible solution, but let’s also note that moving from option 1 to other options would not be difficult if it proved to be necessary later. However, in the meantime, the beauty of simplicity will make everything easier.

One more field…

Three posts ago, we added a table for storing the input filename along with a unique ID so that all the information related to that network would be tied together. Our RESERVOIR table needs a column to store the ID from the NETWORK table.

Our table now looks like this:

Table RESERVOIR

| Column name | Data type | Nullable? | Description |

|---|---|---|---|

| NETWORKID | Foreign key | No | ID of the network in the NETWORK table |

| ID | Character(31) | No | ID label (up to 31 characters (letters and digits)) |

| HEAD | Floating point | No | Head (m or ft) |

| PATTERNID | Character(31) | Yes | Head pattern ID (optional) |

Database Rows

For our single reservoir, the row we would need is shown below (in metric units):

Table RESERVOIR

| NETWORKID | ID | HEAD | PATTERNID |

|---|---|---|---|

| 1 | GihonSpring | 636.0 |

In Imperial/US units, the necessary row would be:

Table RESERVOIR

| NETWORKID | ID | HEAD | PATTERNID |

|---|---|---|---|

| 1 | GihonSpring | 2087 |

The next post will examine the other object types and the questions they raise.

Leave A Comment