Last week in our modelling case study we walked a little farther down the path of providing a database in which we could store the information from an EPANET input file.

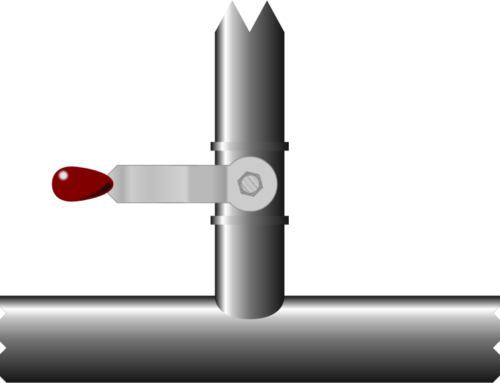

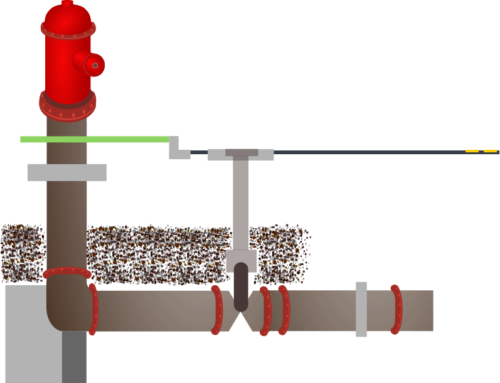

This week we finish our look at this example. Before we tie off the threads, let’s remember that an EPANET analysis is a simulation of a water supply network. Our example network is about as simple as a network can be, set up for us more than 2,700 years ago by King Hezekiah of Judah: Gihon Spring, Hezekiah’s Tunnel and the Pool of Siloam.

In an earlier issue, we mentioned the variable flow that used to come from the spring, with an extra surging flow that recurred every few hours in the wet season, but only once a day or even less often during dry times. Because EPANET simulates a network over a period of time, it allows various times to be set: the duration, the time steps used for the simulation, and other control variables.

As you might expect, these settings are included in a file section with the heading “[TIMES]”. EPANET defines the possible settings as shown in the table below. The meaning of the individual entries is not important for our purposes; what matters is the variety of formats that can be used to indicate times, which the example after the table shows most clearly.

| Time setting | Content or possible values |
|---|---|
| DURATION | value (units) |
| HYDRAULIC TIMESTEP | value (units) |
| QUALITY TIMESTEP | value (units) |
| RULE TIMESTEP | value (units) |
| PATTERN TIMESTEP | value (units) |
| PATTERN START | value (units) |
| REPORT TIMESTEP | value (units) |
| REPORT START | value (units) |
| START CLOCKTIME | value (AM/PM) |
| STATISTIC | NONE/AVERAGED/MINIMUM/MAXIMUM/RANGE |

Times Example

[TIMES]

DURATION 240 HOURS

QUALITY TIMESTEP 3 MIN

QUALITY TIMESTEP 0:03

REPORT START 120

START CLOCKTIME 6:00 AM

Notes

- Units can be SECONDS (SEC), MINUTES (MIN), HOURS, or DAYS. The default is hours.

- If no units are supplied, then time values can be expressed in either decimal hours or hours:minutes notation.

- All entries in the [TIMES] section are optional.
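To make these notes concrete, here is a minimal Python sketch that reduces a time value to seconds. The function name `time_to_seconds` is hypothetical, and two details are assumptions rather than anything taken from the EPANET documentation: units are matched on their first three letters, and an optional seconds field is accepted in the colon notation.

```python
# Seconds per unit, keyed on the first three letters of the unit name.
# Matching on a three-letter prefix is an assumption made for brevity.
_UNIT_SECONDS = {"SEC": 1, "MIN": 60, "HOU": 3600, "DAY": 86400}


def time_to_seconds(value: str, units: str = "") -> int:
    """Convert a [TIMES] value such as '240', '1.5' or '0:03' to seconds."""
    if ":" in value:
        # hours:minutes notation (an optional seconds field is an assumption)
        parts = [int(p) for p in value.split(":")]
        parts += [0] * (3 - len(parts))
        hours, minutes, seconds = parts
        return hours * 3600 + minutes * 60 + seconds
    # Plain number: scale by the unit, defaulting to hours when none is given.
    factor = _UNIT_SECONDS[units.upper()[:3]] if units else 3600
    return round(float(value) * factor)
```

With this in place, `time_to_seconds("3", "MIN")` and `time_to_seconds("0:03")` describe the same quality time step, which is exactly the equivalence the two QUALITY TIMESTEP lines in the example illustrate.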

As can be seen from the example above, there are many different ways that these times can be expressed. In our case study we are looking for a way to store this information.

We go back to our standard approach to modelling: What do we need to store? How much “intelligence” do we want/need in the information?

A few classes of option exist.

- Option 1: Store the text of each line read with no processing

- Option 2: Split the line into pieces to store the components

- Option 3: Store the text with no processing, but provide tools for extracting information from the raw data.

Option 1

This is the simplest option and the point from which we should start, since we are assuming that our data is checked before we receive it. The questions that must be asked are “Is the information useful in this format? Could a different abstraction give a greater benefit?” Imagine that we had stored the information from several hundred input files in our database and we wanted to find how many of these had simulation durations of more than two days. How would we find the answer?

We could use pattern matching (regular expressions) to extract the information we need from [TIMES] entries. However, this would be prone to error if we had to craft specific regular expressions each time we wanted to use the information.

If this sort of question must be answered from the stored data, storing each row as raw, unprocessed data is probably not the best solution.

Option 2

Processing the data as the input file is read makes the information readily available to queries. Note that deciding how to dissect the data would not be easy: there are many different timing options and the values can be specified in several different formats.

Reading the information from an input file becomes more complex, as does writing to an input file. On the other side of the ledger, the value in the data becomes much easier to get at.
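As a rough illustration of what Option 2 involves, the Python sketch below splits a line into a setting name, a value and a qualifier (units or AM/PM), and can rebuild the line afterwards. The names `TimesEntry` and `split_times_line` are hypothetical, and the three-part split is only one of several ways the data could be dissected.

```python
from dataclasses import dataclass

# Setting names from the [TIMES] table; most are two words long.
KEYWORDS = (
    "DURATION", "HYDRAULIC TIMESTEP", "QUALITY TIMESTEP", "RULE TIMESTEP",
    "PATTERN TIMESTEP", "PATTERN START", "REPORT TIMESTEP", "REPORT START",
    "START CLOCKTIME", "STATISTIC",
)


@dataclass
class TimesEntry:
    setting: str    # e.g. "QUALITY TIMESTEP"
    value: str      # e.g. "3", "0:03", "6:00", "AVERAGED"
    qualifier: str  # units or AM/PM; empty when none was given

    def to_line(self) -> str:
        """Rebuild an input-file line from the stored components."""
        parts = (self.setting, self.value, self.qualifier)
        return " ".join(p for p in parts if p)


def split_times_line(line: str) -> TimesEntry:
    """Split one [TIMES] line into setting name, value and qualifier."""
    words = line.split()
    for n in (2, 1):  # try two-word setting names first
        setting = " ".join(words[:n]).upper()
        if setting in KEYWORDS and len(words) > n:
            rest = words[n:]
            qualifier = rest[1].upper() if len(rest) > 1 else ""
            return TimesEntry(setting, rest[0], qualifier)
    raise ValueError(f"not a recognised [TIMES] entry: {line!r}")
```

Each `TimesEntry` would map naturally onto a row with one column per component, at the cost of needing both this splitting step when reading and `to_line` when writing.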

Option 3

The final option is to store the data as described in Option 1, but to also provide tools for massaging it into a format that is as usable as Option 2 would make it. Reading the data remains easy, as does the writing of it.

Tools could be provided in the form of database views which encapsulate pattern matching and present the results in the same sort of format as Option 2 would have done.

Alternatively, one or more stored procedures or functions could take a [TIMES] entry and return the value or values found in it.
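The function approach might look something like the following sketch, which uses Python's built-in `sqlite3` module purely as a stand-in, since we have not settled on a database engine. The function name `DURATION_HOURS`, the two-column table and the sample rows are all assumptions made for illustration. It answers the earlier question about simulations that run for more than two days.

```python
import re
import sqlite3

# Matches a DURATION entry: a number or h:mm value, then optional units.
_ENTRY = re.compile(
    r"^\s*DURATION\s+(?P<value>[\d.:]+)\s*(?P<units>[A-Za-z]*)\s*$",
    re.IGNORECASE,
)
_HOURS_PER_UNIT = {"SEC": 1 / 3600, "MIN": 1 / 60, "HOU": 1.0, "DAY": 24.0}


def duration_hours(line):
    """Return the duration in hours, or None if the line is not a DURATION entry."""
    m = _ENTRY.match(line or "")
    if m is None:
        return None
    value, units = m["value"], m["units"].upper()[:3]
    if ":" in value:
        hours, minutes = value.split(":")[:2]
        return int(hours) + int(minutes) / 60
    factor = _HOURS_PER_UNIT.get(units) if units else 1.0  # default is hours
    return None if factor is None else float(value) * factor


conn = sqlite3.connect(":memory:")
# Make the Python function callable from SQL under a hypothetical name.
conn.create_function("DURATION_HOURS", 1, duration_hours)
conn.execute("CREATE TABLE TIME (NETWORKID INTEGER, LINE CHARACTER(255))")
conn.executemany("INSERT INTO TIME VALUES (?, ?)", [
    (1, "DURATION 240 HOURS"),      # illustrative sample rows
    (1, "QUALITY TIMESTEP 3 MIN"),
    (2, "DURATION 24:00"),
    (3, "DURATION 3 DAYS"),
])
# Networks whose simulation runs for more than two days.
long_runs = conn.execute(
    "SELECT NETWORKID FROM TIME WHERE DURATION_HOURS(LINE) > 48 "
    "ORDER BY NETWORKID"
).fetchall()
```

The pattern matching is written once, inside the function, so individual queries no longer need their own hand-crafted regular expressions.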

Selected option

The best option is not easy to choose, since it depends on the detailed usage requirements. In all likelihood, Option 3 will be the best as long as the number of rows in the TIME table is not too great, because the pattern matching has to be repeated over the stored rows each time the extracted values are needed. The table would look like this:

Table TIME

| Column name | Data Type | Description |
|---|---|---|
| NETWORKID | Foreign key | ID of project |
| LINE | Character(255) | Line read from input file |

A database view called TIME_VIEW could be created which uses pattern matching to provide extra columns for DURATION, HYDRAULIC_TIMESTEP, etc.
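A minimal sketch of such a view is shown below, again using SQLite through Python's `sqlite3` module as a stand-in for whichever database is actually chosen. It relies on `LIKE` and `SUBSTR` rather than full regular expressions, covers only three of the settings, and assumes that each stored line starts with the keyword and that two-word keywords are separated by a single space. The sample rows are illustrative only.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.executescript("""
CREATE TABLE TIME (NETWORKID INTEGER, LINE CHARACTER(255));

-- One row per network; each column holds the text that follows the keyword.
CREATE VIEW TIME_VIEW AS
SELECT NETWORKID,
       MAX(CASE WHEN UPPER(LINE) LIKE 'DURATION %'
                THEN TRIM(SUBSTR(LINE, 10)) END) AS DURATION,
       MAX(CASE WHEN UPPER(LINE) LIKE 'HYDRAULIC TIMESTEP %'
                THEN TRIM(SUBSTR(LINE, 20)) END) AS HYDRAULIC_TIMESTEP,
       MAX(CASE WHEN UPPER(LINE) LIKE 'START CLOCKTIME %'
                THEN TRIM(SUBSTR(LINE, 17)) END) AS START_CLOCKTIME
FROM TIME
GROUP BY NETWORKID;
""")

conn.executemany("INSERT INTO TIME VALUES (?, ?)", [
    (1, "DURATION 240 HOURS"),
    (1, "HYDRAULIC TIMESTEP 1:00"),
    (1, "START CLOCKTIME 6:00 AM"),
])
row = conn.execute("SELECT * FROM TIME_VIEW").fetchone()
```

The view still returns each value as text, so a question such as "which durations exceed two days?" would need a further conversion step on top of it.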

Conclusion

This extra detail on times concludes the very basic modelling of the EPANET input file for our case study. Much would still remain to be modelled if the job were to be done thoroughly, but we have been able to develop a model which allows us to describe our very simple network and to consider various useful modelling lessons in the process.

If you enjoyed this modelling exercise, you may like to think of what the differences would be if you were trying to create a network editing program which could read an EPANET input file, edit the network it represents and write an input file to feed to the EPANET analysis engine. I have done this in working on another project of mine – WaterSums – and it raises some quite different questions. If you are interested, feel free to contact me about it.
